toPandas Datetime Error

I tried and failed to convert a PySpark DataFrame that I was working in to one in pandas for the better part of an hour tonight.

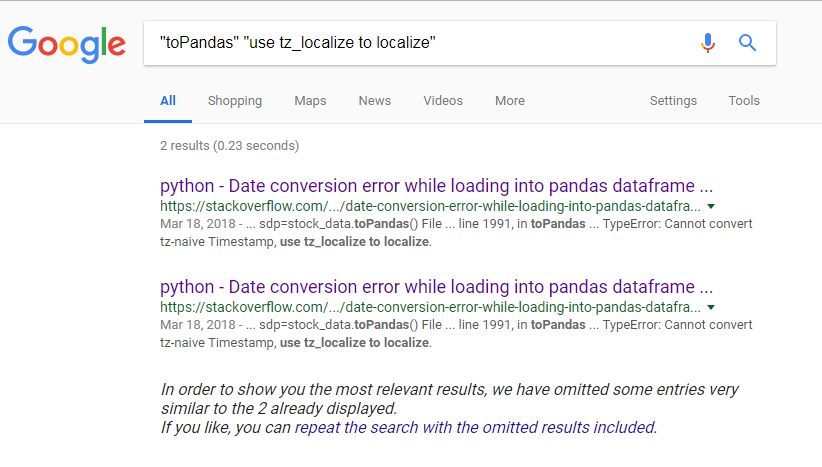

Ultimately figured out a naive workaround and wanted to leave a solution behind for anybody googling the error message

TypeError: Cannot convert tz-naive Timestamp, use tz_localize to localize

This poor soul was running into the same issue a few months ago, and it’s, hilariously, the only hit you get when looking up this issue on the whole, wide Internet.

from IPython.display import Image

Image('../images/errormsg.PNG')

Follow the Docs

import findspark

findspark.init()

import pyspark

sc = pyspark.SparkContext()

spark = pyspark.sql.SparkSession(sc)If you look at the PySpark documentation around this function, they have a super-vanilla example that takes a simple table that looks like this

from pyspark.sql import Row

data = [Row(name='Alice', age=2),

Row(name='Bob', age=5)]

pysparkDf = spark.createDataFrame(data)pysparkDf.show()+---+-----+

|age| name|

+---+-----+

| 2|Alice|

| 5| Bob|

+---+-----+

and pipes it, all nice and neat, into a pandas.DataFrame so we can do some rich data analysis. For my money, this is probably the best real-world example I’ve seen in any documentation.

df = pysparkDf.toPandas()

df| age | name | |

|---|---|---|

| 0 | 2 | Alice |

| 1 | 5 | Bob |

Recreate the Problem

Imagine, however, that your data looks like something closer to a server log, and there’s a third field, sessionDt that gets captured as well.

from datetime import datetime

data = [Row(name='Alice', age=2, sessionDt=datetime.now()),

Row(name='Bob', age=5, sessionDt=datetime.now())]

pysparkDf = spark.createDataFrame(data)Now we’ve got 3 columns

pysparkDf.show()+---+-----+--------------------+

|age| name| sessionDt|

+---+-----+--------------------+

| 2|Alice|2018-06-06 21:20:...|

| 5| Bob|2018-06-06 21:20:...|

+---+-----+--------------------+

One of which is of type timestamp

pysparkDf.dtypes[('age', 'bigint'), ('name', 'string'), ('sessionDt', 'timestamp')]

And pandas loses its damn mind.

pysparkDf.toPandas()---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-9-c829e1f7ed66> in <module>()

----> 1 pysparkDf.toPandas()

C:\opt\Spark\spark-2.3.0-bin-hadoop2.7\python\pyspark\sql\dataframe.py in toPandas(self)

1989 if isinstance(field.dataType, TimestampType):

1990 pdf[field.name] = \

-> 1991 _check_series_convert_timestamps_local_tz(pdf[field.name], timezone)

1992 return pdf

1993

C:\opt\Spark\spark-2.3.0-bin-hadoop2.7\python\pyspark\sql\types.py in _check_series_convert_timestamps_local_tz(s, timezone)

1835 :return pandas.Series where if it is a timestamp, has been converted to tz-naive

1836 """

-> 1837 return _check_series_convert_timestamps_localize(s, None, timezone)

1838

1839

C:\opt\Spark\spark-2.3.0-bin-hadoop2.7\python\pyspark\sql\types.py in _check_series_convert_timestamps_localize(s, from_timezone, to_timezone)

1821 # `s.dt.tz_localize('tzlocal()')` doesn't work properly when including NaT.

1822 return s.apply(

-> 1823 lambda ts: ts.tz_localize(from_tz, ambiguous=False).tz_convert(to_tz).tz_localize(None)

1824 if ts is not pd.NaT else pd.NaT)

1825 else:

C:\Users\Nick\Anaconda3\lib\site-packages\pandas\core\series.py in apply(self, func, convert_dtype, args, **kwds)

2353 else:

2354 values = self.asobject

-> 2355 mapped = lib.map_infer(values, f, convert=convert_dtype)

2356

2357 if len(mapped) and isinstance(mapped[0], Series):

pandas/_libs/src\inference.pyx in pandas._libs.lib.map_infer()

C:\opt\Spark\spark-2.3.0-bin-hadoop2.7\python\pyspark\sql\types.py in <lambda>(ts)

1822 return s.apply(

1823 lambda ts: ts.tz_localize(from_tz, ambiguous=False).tz_convert(to_tz).tz_localize(None)

-> 1824 if ts is not pd.NaT else pd.NaT)

1825 else:

1826 return s

pandas/_libs/tslib.pyx in pandas._libs.tslib.Timestamp.tz_convert (pandas\_libs\tslib.c:13875)()

TypeError: Cannot convert tz-naive Timestamp, use tz_localize to localize

Timestamp?

And so you ensure that your SparkSession exists in some localized timestamp

spark.conf.get('spark.sql.session.timeZone')'America/New_York'

Ah. Maybe my computer’s just confused about where it’s at.

spark.conf.set('spark.sql.session.timeZone', 'America/Detroit')spark.conf.get('spark.sql.session.timeZone')'America/Detroit'

And you run it again

data = [Row(name='Alice', age=2, sessionDt=datetime.now()),

Row(name='Bob', age=5, sessionDt=datetime.now())]

try:

pysparkDf = spark.createDataFrame(data)

pysparkDf.toPandas()

except:

print('Lol, nope')Lol, nope

Save me, Unix

And so you think to yourself, “well maybe if I convert it to unix…”

from pyspark.sql import functions as F

unix = pysparkDf.withColumn('sessionDt', F.unix_timestamp('sessionDt'))

unix.collect()[Row(age=2, name='Alice', sessionDt=1528334487),

Row(age=5, name='Bob', sessionDt=1528334487)]

”…And then try and convert it back to a timestamp…”

notUnix = unix.withColumn('sessionDt', F.from_unixtime(unix['sessionDt']))

notUnix.collect()[Row(age=2, name='Alice', sessionDt='2018-06-06 21:21:27'),

Row(age=5, name='Bob', sessionDt='2018-06-06 21:21:27')]

”…maybe it will just work for some reason?”

notUnix.toPandas()| age | name | sessionDt | |

|---|---|---|---|

| 0 | 2 | Alice | 2018-06-06 21:21:27 |

| 1 | 5 | Bob | 2018-06-06 21:21:27 |

I’m going to throw up. That worked?

noWay = notUnix.toPandas()

noWay.head()| age | name | sessionDt | |

|---|---|---|---|

| 0 | 2 | Alice | 2018-06-06 21:21:27 |

| 1 | 5 | Bob | 2018-06-06 21:21:27 |

How in the hell did that work??

Oh…

noWay.dtypesage int64

name object

sessionDt object

dtype: object

Because sessionDt read in as a string.

Actually…

That’s not the stupidest idea I’ve ever had.

import pandas as pd

df = pysparkDf.withColumn('sessionDt',

pysparkDf['sessionDt'].cast('string')).toPandas()

df['sessionDt'] = pd.to_datetime(df['sessionDt'])

df| age | name | sessionDt | |

|---|---|---|---|

| 0 | 2 | Alice | 2018-06-06 21:21:27.981390 |

| 1 | 5 | Bob | 2018-06-06 21:21:27.981390 |

df.dtypesage int64

name object

sessionDt datetime64[ns]

dtype: object