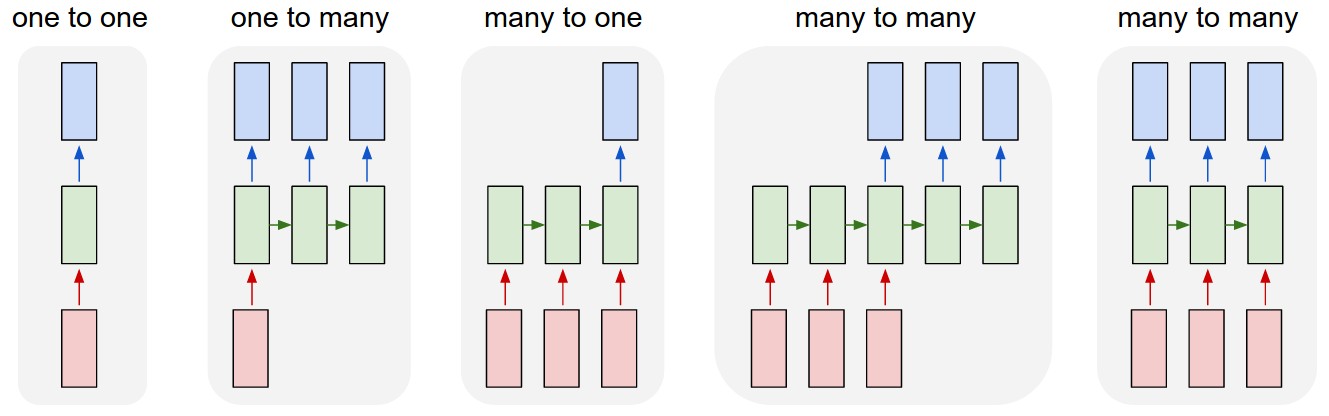

Sentiment Classification

Given a word embedding that’s compatible with our corpus, we could build a reasonably accurate sentiment classifier by taking a naive average of the embedding vectors for all the words in our sentence.

from IPython.display import Image

Image('images/sentiment_naive.png')
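The averaging step can be sketched in a few lines of plain Python. This is only an illustration: `TOY_EMBEDDINGS`, its 2-d values, and the helper `average_embedding` are all made up here, and a real setup would use pretrained vectors with many more dimensions.

```python
# Toy 2-d embeddings; the values are invented for illustration only.
TOY_EMBEDDINGS = {
    "great": [0.9, 0.1],
    "food": [0.1, 0.5],
    "terrible": [-0.8, 0.2],
}

def average_embedding(tokens, embeddings):
    """Return the element-wise mean of the vectors for the known tokens."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        raise ValueError("no token in the sentence has an embedding")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

# One fixed-size vector per sentence, regardless of sentence length.
sentence_vector = average_embedding("great food".split(), TOY_EMBEDDINGS)
```

The resulting `sentence_vector` has the same size no matter how long the sentence is, which is what lets it be fed into an ordinary classifier.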

However, this approach falls short when an important context word negates the rest of the sentence. For instance, in the sentence

Completely lacking in good taste, good service, and good ambiance

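the word “good” appears three times and likely outweighs the single negative word “lacking”, enough that a naive average might predict a “fair” to “good” sentiment. An average also ignores word order, so it has no way of knowing that “lacking” applies to everything that follows it.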
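To make the failure concrete, here is a small sketch that uses hypothetical 1-d "sentiment scores" in place of real embeddings. The numbers in `TOY_SCORES` are assumptions chosen for illustration, with “lacking” deliberately given a stronger weight than any single “good”.

```python
# Hypothetical 1-d "sentiment" embeddings: positive values lean positive.
TOY_SCORES = {"good": 1.0, "lacking": -1.5}

def mean_score(tokens, scores):
    """Average the per-word scores, treating unknown words as neutral (0.0)."""
    return sum(scores.get(t, 0.0) for t in tokens) / len(tokens)

review = "completely lacking in good taste good service and good ambiance"
tokens = review.split()

# Three "good" tokens (+3.0) outweigh one "lacking" (-1.5).
score = mean_score(tokens, TOY_SCORES)
predicted = "positive" if score > 0 else "negative"

# Averaging ignores word order, so any reordering scores identically.
reordered_score = mean_score(sorted(tokens), TOY_SCORES)
```

Even with the negative word weighted more heavily than a positive one, the average comes out above zero, and shuffling the words changes nothing.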

Instead, we need a model that takes word order into account.

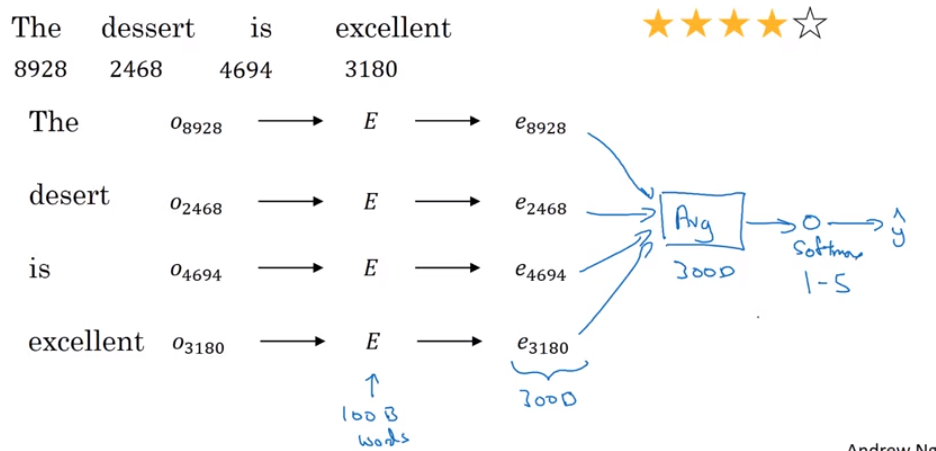

A better approach here is to run the embeddings through some sort of recurrent neural network (RNN), which passes information from word to word and lets the model hold onto the negating effect of “lacking”.

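This ultimately manifests as a “many-to-one” RNN: the network reads the whole sequence of words and produces a single sentiment prediction at the end.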
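The forward pass of a many-to-one network might look roughly like the sketch below. It assumes a plain tanh RNN cell with no bias terms and a sigmoid output, which is only one of several possible choices. The weights are random and untrained, and the dimensions, names (`W_xh`, `W_hh`, `w_hy`), and input vectors are all hypothetical.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is reproducible

EMBED_DIM, HIDDEN_DIM = 2, 3

def random_matrix(rows, cols):
    """Build a rows x cols matrix of small random weights."""
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Untrained, randomly initialised parameters (illustrative only).
W_xh = random_matrix(HIDDEN_DIM, EMBED_DIM)   # input -> hidden
W_hh = random_matrix(HIDDEN_DIM, HIDDEN_DIM)  # hidden -> hidden
w_hy = [random.uniform(-0.5, 0.5) for _ in range(HIDDEN_DIM)]  # hidden -> output

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def many_to_one_rnn(sequence):
    """Read every word vector in order, then emit one probability at the end."""
    h = [0.0] * HIDDEN_DIM
    for x in sequence:
        # The new state depends on the current word AND the previous state.
        pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
        h = [math.tanh(p) for p in pre]
    # Only the final hidden state feeds the output layer: "many to one".
    logit = sum(w * hi for w, hi in zip(w_hy, h))
    return 1.0 / (1.0 + math.exp(-logit)), h

# Three made-up 2-d word vectors standing in for an embedded sentence.
sentence = [[-0.8, 0.2], [0.9, 0.1], [0.1, 0.5]]
probability, final_state = many_to_one_rnn(sentence)
```

Because each hidden state is computed from the previous one, an early word such as “lacking” can influence how every later word is processed, which a plain average cannot do.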

from IPython.display import Image

Image('images/rnn_types.png')